PersonLab复现过程

我打算记录整个复现的心路历程,和代码实践遇到的问题 正在写完这一行字的我, 什么代码都没写~ 所以以下的文字是一篇

记叙文, 默认的叙述手法是顺序

选个复现思路: - 自上而下: 从train.py的main(),逐渐构建所需要的函数以及其子函数 - 自下而上: 从每个最小的函数(如从读入数据集,预处理图像,构建标签)开始,逐渐向上封装函数

我倾向于后者:

我先想清楚了整个项目需要哪些模块, 然后创建了如下的几个空文件:

backbone_network.py, label_constrction.py, data_augmentation.py , data_iteration.py, loss.py, evaluate.py, greedy_decoding.py, instance_association.py, model.py , train.py

空文件先创建好,刺激你去复现,后面遇到问题再改

从label_construction.py 开始

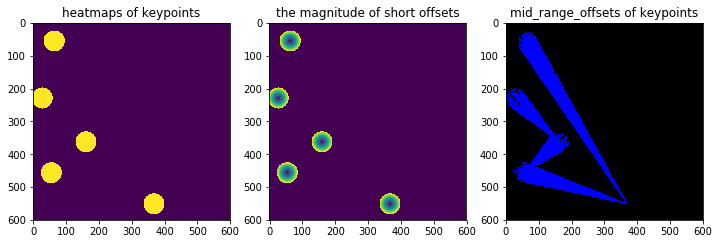

PersonLab 最精华的部分应该是如何利用COCO给定的标签信息, 利用人类的直觉和知识重新加工成几何上的监督信息, 即heatmaps,short-range offset,hough_socre_maps,mid-range pairwise offset, long-range offset,persons_mask. 所以此部分也是复现的关键步骤.

对了, 有一点需要强调, 复现前要仔细阅读论文的实验部分(Experiment)的描述.我读了一些关键的描述语言, 后面如果涉及到再详细说明

继续

coco keypoint detection task 数据集提供的标签格式是.json, 举个例子,person_keypoints_val2017.josn其中包含info和annotation,

info负责提供数据集中每张图像的image_id,file_name,height,widthannotation负责提供标注信息,其中的多个样本可能来自于同一张图像(多人问题嘛),每个样本包括如下:我们的目标就是找到每张图像中所有样本,并且主要根据他们的1

2

3

4

5

6

7

8{"segmentation": [[76,46.53,省略,31.03,99,省略,46.03]],

"num_keypoints": 15,

"area": 2404.375,

"iscrowd": 0,

"keypoints": [102,50,1,0,0,0,101,46,2,0,0,0,97,46,2,82,44,2,91,49,2,97,43,2,109,66,2,112,43,2,128,73,2,71,74,2,76,79,2,94,65,2,110,81,2,84,90,2,129,99,2],"image_id": 149770,

"bbox": [65,31.03,81,77.5],

"category_id": 1,

"id": 427983}keypoints,segmentation的坐标信息来构造上面提到的监督信号,bbox的坐标其实就不需要了.

以下论文提到的两点请注意:

在利用segmentation的时候,需要注意,作者提到了关于处理特殊情况的操作: > we back-propagate across the full image, only excluding areas that contain people that have not been fully annotated with keypoints (person crowd areas and small scale person segments in the COCO dataset)

这意味着我们要考虑iscrowd==1的情况,我们在进行loss计算时,要把iscrowd==1 的区域mask掉.

此外, 在论文的Imputing missing keypoint annotations章节,作者说明: >The standard COCO dataset does not contain keypoint annotations in the training set for the small person instances, and ignores them during model evaluation.However, it contains segmentation annotations and evaluates mask predictions for those small instances. Since training our geometric embeddings requires keypoint annotations for training, we have run the single-person pose estimator of [G-RMI] (trained on COCO data alone) in the COCO training set on image crops around the ground truth box annotations of those small person instances to impute those missing keypoint annotations.

因为暂时不打算用另外一个模型预测小尺寸图像的keypoints,这里我们直接忽略掉小尺寸的instance segmentation

1 |

|

<generator object get_coco_annotations at 0x0000024759D61518>上面是构造了一个生成器, 迭代产生(img, keypoints_skeletons, instance_masks) 这样的元组.

接下来要做的事,就是如何把最原始的标签重新加工编码成一个更加几何化的监督表示.

我们要明确一点, 论文中构造的偏移向量的表示都是在原图像尺寸的量化精度下的, 而不是神经网络直接输出的feature map的尺寸, 因为network的输出已经被降采样了, 所以不论是预测出的heatmap,还是offsets都被上采样,来还原到原图的尺寸精度上.

所以我们编码用来监督的表示时,需要参考原图的高度和宽度.

根据关键点的位置构造以关键点位置为中心,半径为r的圆形disk区域,区域内的取值为1,区域外的取值为0

最直接的做法是,遍历heatmap的每个位置,通过计算距离来判断是否落在某个关键点的半径范围内,但这样的时间复杂度为O(H*W) 而通过数学的直观角度,大部分的区域都是disk外的,有没有更快捷的方法,这里我采用的是:

引入高斯核的技巧,即在每个关键点的位置生成高斯分布的函数,其分布满足中心对称,那么通过设定阈值(半径处取值),大于阈值设置为1,小于阈值设置为0

高斯核的技巧,避免了heatmap上所有位置的遍历,此处我用了opencv带的cv2.GaussianBlur()函数,这个实际上也是个滤波器,也包含遍历,但其复杂度为

O(H*W)但其函数通过openc库实现。(是否需要考证一下这种方法的计算速度?毕竟滤波器遍历了整个heatmap。有没有更直接的指定位置插入高斯核的现成的函数?)

1 | def disk_mask_heatmap(one_hot_heatmap,radius): |

上述方法可以解决了生成disk的难题,但是接下考虑构造short-range offset时,必须找到在关键点周围半径内的位置计算偏移。这种功能要求代码,必须给定一个关键点位置,就可以获得其周围对应的位置。这个需求也就是:在产生指定圆的时候,同时记录其圆内所有像素的位置和像素相对于圆心的偏移。

但是上面的代码不是逐个处理关键点的方法,而是一次性生成的,所以不能够实现上面的需求

获取heatmap上位置索引的技巧,比如,给定一个HxW大小的heatmaps

1 | import numpy as np |

(3, 3, 2)

[[[0 0]

[1 0]

[2 0]]

[[0 1]

[1 1]

[2 1]]

[[0 2]

[1 2]

[2 2]]]

-1这就可以得到了heatmap每个位置的地址索引. 继续考虑如何构造disk区域。 ## 获取每个关键点影响周围的disk区域

1 | def get_keypoint_discs(all_keypoints,map_shape,K=17,radius=4): |

返回的discs,包含K个heatmap,其中每个heatmap对应一种类型的人体关键点的所有人体的位置,每个位置的周围disk内的所有像素的位置的索引。进而达到了功能的需求。

进而我们可以设计这样的函数,根据所有人体的所有关键点的位置集合,

- 获取每个关键点位置周围的disk区域,进行赋值,得到heatmaps

- 获取每个关键点位置周围的disk区域内每个像素的位置索引,与中心位置在x,y方向上作差,得到short offsets

- 获取起始关键点位置周围的disk区域内每个像素的位置索引,用终点关键点的位置于这些像素位置的索引作差,得到mid-range offsets

1 | import numpy as np |

keypoints coordiantes:

[[[ 28 228 1]

[161 361 1]

[ 55 455 1]

[ 64 54 1]

[368 550 1]]]

pair wise keypoints:

[[0, 1], [1, 2], [2, 4], [3, 4]]

[[[ 28 228 1]

[161 361 1]

[ 55 455 1]

[ 64 54 1]

[368 550 1]]]

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).